May 30, 2022

An Introduction to Caching Patterns

A cache is a software component that stores a subset of transient data so that future requests for that data can be served faster.

In short, it can be thought of as a high-speed data storage layer whose primary purpose is to improve the performance of an application by reducing the need to access slower persistent storage or to recompute data unnecessarily.

The idea is that whenever a new request is processed, its result is stored in the cache so it can be retrieved and reused when the same request runs again, which ultimately helps reduce network overhead, CPU usage and, by extension, the overall infrastructure cost.

Caching patterns

Cache-aside

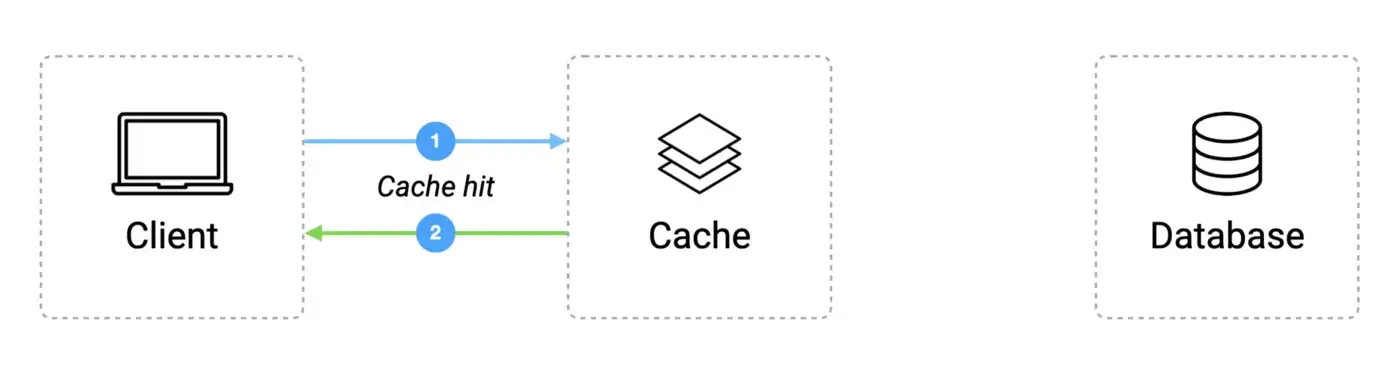

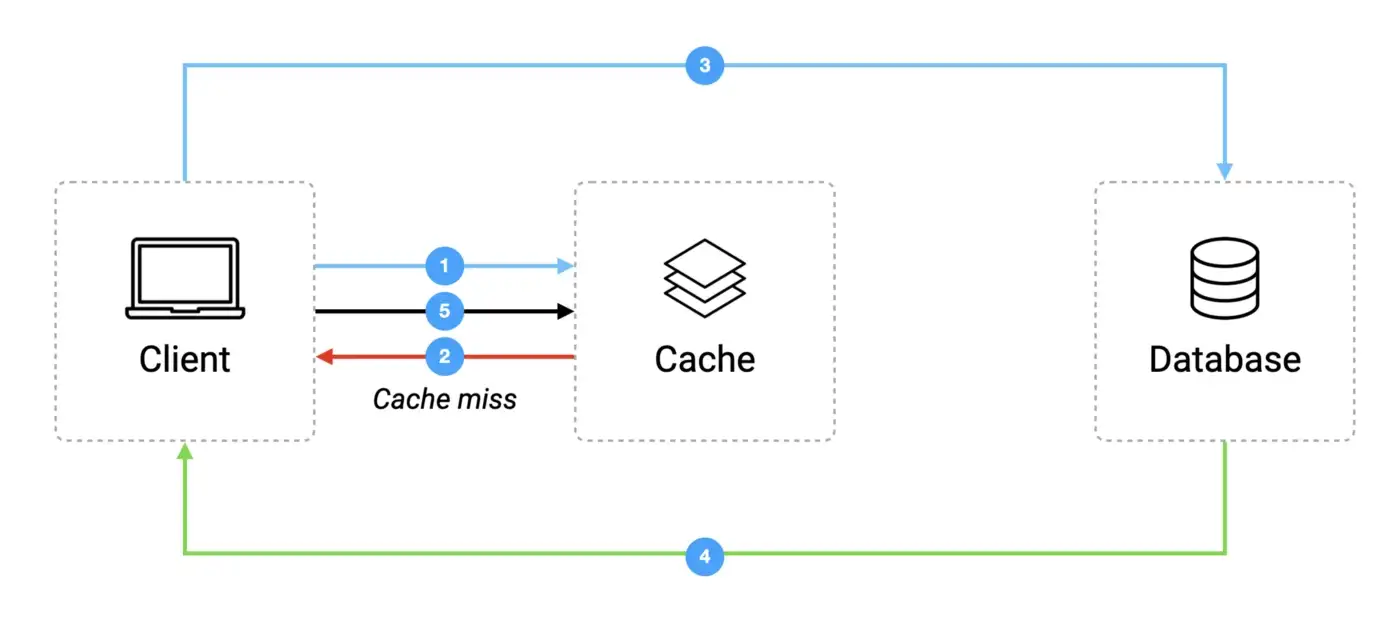

In the cache-aside pattern, the application first requests the data from the cache.

If the data exists in the cache, it is sent directly back to the application. This event is called a cache hit.

If, on the contrary, the data is missing, which is called a cache miss, the application sends another request to the database and writes the result to the cache so that the data can be retrieved from the cache next time.
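The hit and miss paths described above can be sketched in a few lines of Python. This is only a minimal illustration: the two dictionaries are hypothetical stand-ins for a real cache and a real database, and the name `get_with_cache_aside` is made up for this example.

```python
from typing import Dict, Optional

# Hypothetical stand-ins: one dict plays the cache, the other the database.
cache: Dict[str, str] = {}
database: Dict[str, str] = {"user:1": "Alice"}


def get_with_cache_aside(key: str) -> Optional[str]:
    """Application-side lookup: check the cache, fall back to the database."""
    if key in cache:
        return cache[key]  # cache hit: served straight from the cache

    # Cache miss: the application itself queries the database...
    value = database.get(key)
    if value is not None:
        # ...and writes the result to the cache for the next request.
        cache[key] = value
    return value
```

Note that all the caching logic lives in the application here; the cache itself is a passive store.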

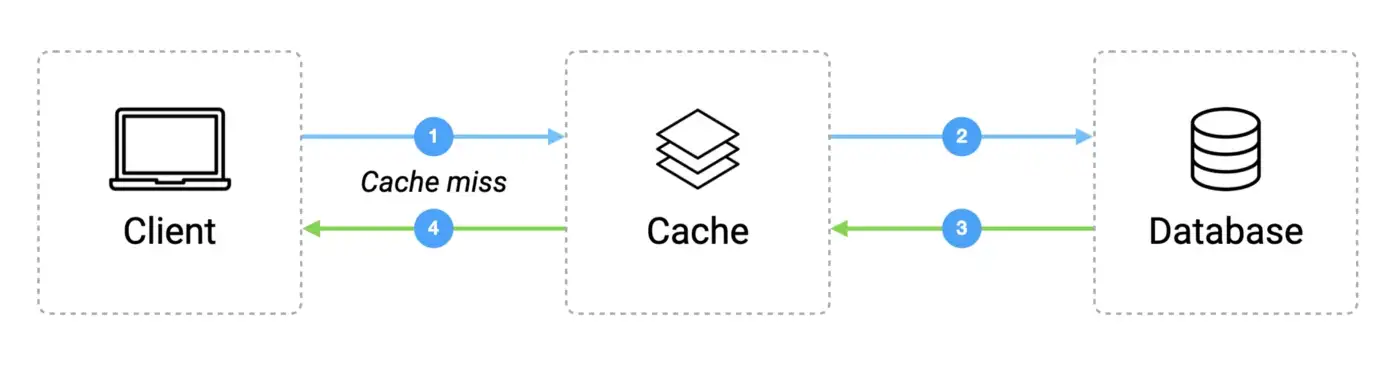

Read-Through (Lazy Loading)

The read-through or lazy loading pattern is quite similar to cache-aside, with the difference that when a cache miss happens, it’s not the application but the cache itself that pulls the data from the database and stores it before sending it back to the application.
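As a rough sketch of that difference, the cache below is handed a loader function and calls it on its own whenever a key is missing. The class name `ReadThroughCache` and the `loader` parameter are assumptions made for this example, and a dictionary again stands in for the database.

```python
from typing import Callable, Dict, Optional


class ReadThroughCache:
    """A cache that fetches missing entries from the backing store itself."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self._loader = loader  # how the cache reaches the database
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key in self._store:
            return self._store[key]  # cache hit
        # Cache miss: the cache, not the application, pulls the data.
        value = self._loader(key)
        if value is not None:
            self._store[key] = value
        return value


# Hypothetical database; the application only ever talks to the cache.
database = {"user:1": "Alice"}
cache = ReadThroughCache(loader=database.get)
```

The application code shrinks to a single `cache.get(key)` call, at the cost of the cache needing to know how to reach the database.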

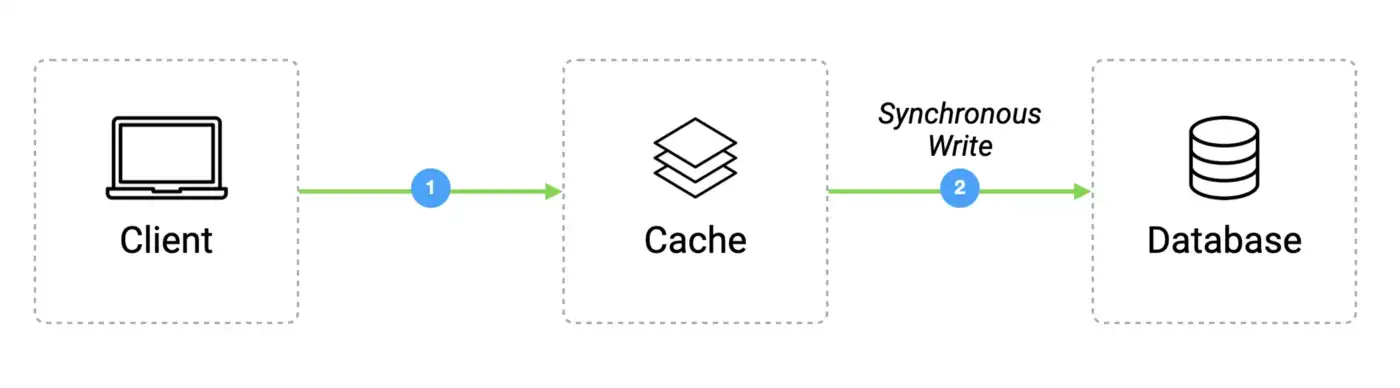

Write-Through

In the case of the write-through pattern, when the application sends data to be stored, the cache captures the data and synchronously writes it both to its own memory and to the database.

This pattern is often used when writes are infrequent. It is quite helpful with regard to data recovery and consistency, but it introduces write latency, as every write has to go to two different locations.
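A minimal sketch of a write-through cache could look like the following. The class name `WriteThroughCache` is hypothetical, a dictionary stands in for the database, and writing to the database before the cache is a choice made for this example rather than something the pattern mandates.

```python
from typing import Dict, Optional


class WriteThroughCache:
    """Every write goes to the database and to the cache in the same call."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._database = database  # hypothetical stand-in for a real database
        self._store: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        # Synchronous write to the database: the caller waits for it.
        self._database[key] = value
        # Only then is the cached copy updated, so both stay in step.
        self._store[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)
```

Because `put` only returns once both writes are done, the two copies stay consistent, which is also where the extra write latency comes from.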

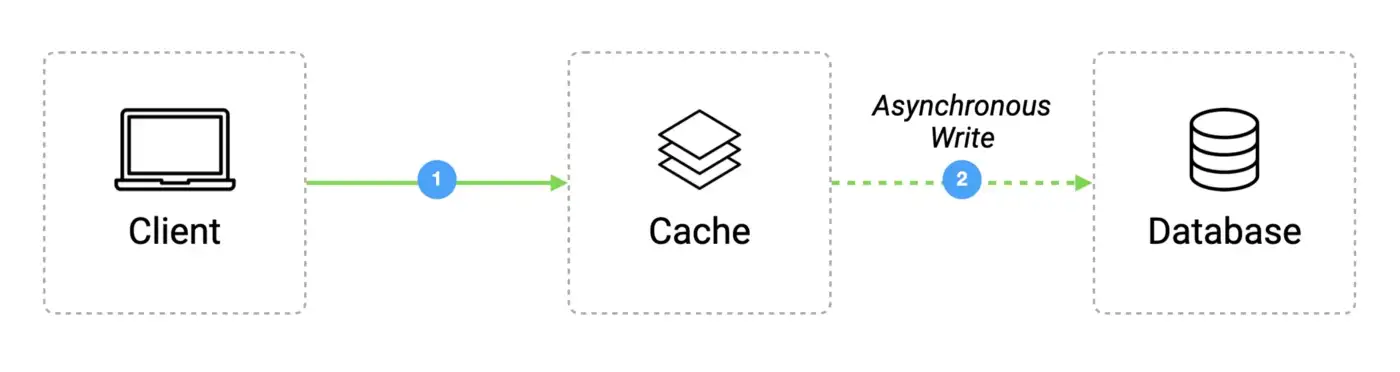

Write-Behind

Finally, the write-behind caching pattern takes a similar approach to the write-through pattern, with the exception that writing to the main database is done asynchronously.

But because the data stored in the cache is volatile, there is a risk of losing any writes not yet persisted to the database if the cache goes down.

Fortunately, some advanced caching solutions like Redis now provide persistence options, such as point-in-time snapshots (RDB) and an append-only file (AOF), that help mitigate data loss.
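To make the write-behind trade-off concrete, here is a small sketch in which writes are acknowledged as soon as they reach the cache and are queued for the database. The class name `WriteBehindCache` and the explicit `flush` method are assumptions for this example; a real system would typically drain the queue from a background worker or timer.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class WriteBehindCache:
    """Writes hit the cache immediately and reach the database later."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._database = database  # hypothetical stand-in for a real database
        self._store: Dict[str, str] = {}
        self._pending: Deque[Tuple[str, str]] = deque()

    def put(self, key: str, value: str) -> None:
        self._store[key] = value
        # The database write is only queued, so the caller does not wait.
        self._pending.append((key, value))

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def flush(self) -> int:
        """Apply queued writes to the database in order; return how many."""
        flushed = 0
        while self._pending:
            key, value = self._pending.popleft()
            self._database[key] = value
            flushed += 1
        return flushed
```

Anything still sitting in the pending queue when the cache process dies is lost, which is exactly the risk mentioned above.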

Caching challenges

Even though caching is a fairly simple way to improve the performance of a system, like any software solution, it comes with a few challenges that need to be seriously addressed when implementing such a mechanism.

Choosing which data to be cached

The first thing to be aware of is memory management.

Indeed, as RAM is usually quite limited, and even more so when shared between multiple applications running on the same machine, we really need to be careful not to fill the cache up too rapidly with large data, which naturally leads to the question of which data to put in the cache.

In principle, the cache should hold frequently requested data that takes a large amount of time to generate or retrieve. Unfortunately, identifying this data is not a trivial task and will usually require several rounds of development before finding the right values to store.
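One common way to keep memory usage bounded is to cap the number of cached entries and evict the least recently used one when the cap is reached. The sketch below uses Python's standard `functools.lru_cache` for this; the function `expensive_report` and the tiny `maxsize` are hypothetical and only there to illustrate the idea.

```python
from functools import lru_cache


@lru_cache(maxsize=2)  # keep at most 2 results; evict the least recently used
def expensive_report(customer_id: int) -> str:
    # Hypothetical placeholder for a slow computation or database query.
    return f"report for customer {customer_id}"
```

Calling `expensive_report.cache_info()` returns the hit, miss and current size counters, which can help when tuning `maxsize` against real traffic.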

Maintaining data coherence

The second challenge is to define how long data should be cached for, based on how long we can tolerate serving potentially stale data to users. Indeed, having two copies of the same data will ultimately result in them diverging over time.

We therefore have to define the best cache update or invalidation mechanism in order to avoid throwing our system off balance by storing corrupted or outdated information in our databases.
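One simple mechanism is to attach a time-to-live (TTL) to each entry and to allow explicit invalidation when the underlying data changes. The following is a minimal sketch under those assumptions; the class name `TTLCache` and its injectable `clock` parameter are made up for this example.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire after ttl_seconds, bounding how stale a read can be."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[str, float]] = {}

    def put(self, key: str, value: str) -> None:
        self._store[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: treat as a miss
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Drop an entry immediately, e.g. after the database row changes."""
        self._store.pop(key, None)
```

A shorter TTL reduces staleness but increases cache misses, so the value has to be chosen per type of data.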

Dealing with cache misses

Finally, the third challenge is to keep cache misses low, as requesting data that’s not available in the cache adds an extra request round trip, introducing latency that would not have been incurred in a system without caching.

So, to fully benefit from the speed improvement of the cache, cache misses must be kept as low as possible compared to cache hits.
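A practical way to track this is to count hits and misses and watch the hit ratio, that is, hits divided by total lookups. The sketch below shows one possible way to do so; the class name `CountingCache` and the `loader` argument are hypothetical.

```python
from typing import Callable, Dict


class CountingCache:
    """A cache that records hits and misses so the hit ratio can be monitored."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str, loader: Callable[[str], str]) -> str:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Miss: pay the extra round trip to the slower source, then cache it.
        self.misses += 1
        value = loader(key)
        self._store[key] = value
        return value

    def hit_ratio(self) -> float:
        """Fraction of lookups served from the cache (0.0 if none yet)."""
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A persistently low ratio suggests that the wrong data is being cached, or that entries are evicted or expired before they can be reused.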